Online Dating 2.0: Using AI to Detect Height Lies!

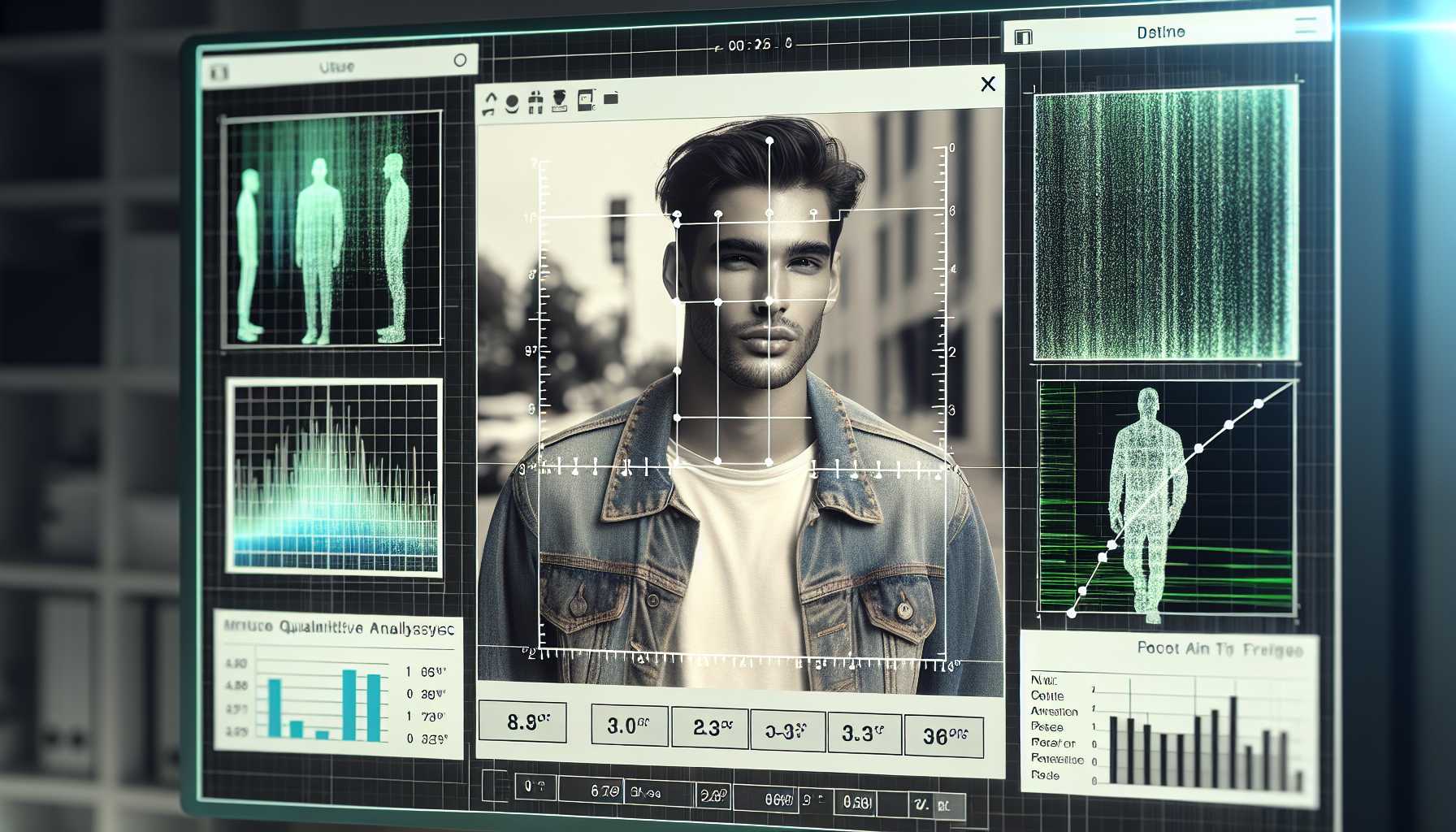

In the ever-evolving world of online dating, the game just got an unexpected twist. With the emergence of cutting-edge AI models like OpenAI’s ChatGPT, some users are employing this technology to bust height fibs on dating apps like Tinder and Hinge.

Recently, social media was abuzz when a user shared a rather unconventional use of ChatGPT—estimating someone’s height from photos. With men’s height exaggerations becoming almost a meme, it’s both amusing and a tad unsettling to see how AI is entering the fray.

The Experiment Unveiled

In a viral tweet, a user known as Venture Twins uploaded photos of herself and her friends to ChatGPT and asked it to guess their heights. The results were surprisingly close, within an inch or so of their actual heights. Depending on the quality and angles of the photos, ChatGPT’s height estimates can be quite accurate, although not infallible.

My Own Take

Curious, I tried the experiment myself and found the results varied. ChatGPT pegged my height at between 5'9" and 6'1". While it’d be nice to claim a few extra inches, I’m firmly in the 5'9" category. Nonetheless, the experiment showed how AI can be a mixed bag: astoundingly precise in some instances, but easy to mislead with the wrong inputs.

The Bigger Picture

Amusing as it is, the experiment raises privacy and ethical questions about how we use AI. Are we crossing a line by uploading photos of strangers to an AI tool with a dicey privacy record? Should we even place so much importance on something as trivial as height? Perhaps instead of pushing tech boundaries to call out every little fib, we should focus on more human-centric solutions like speed dating or simply taking a break from the virtual world.

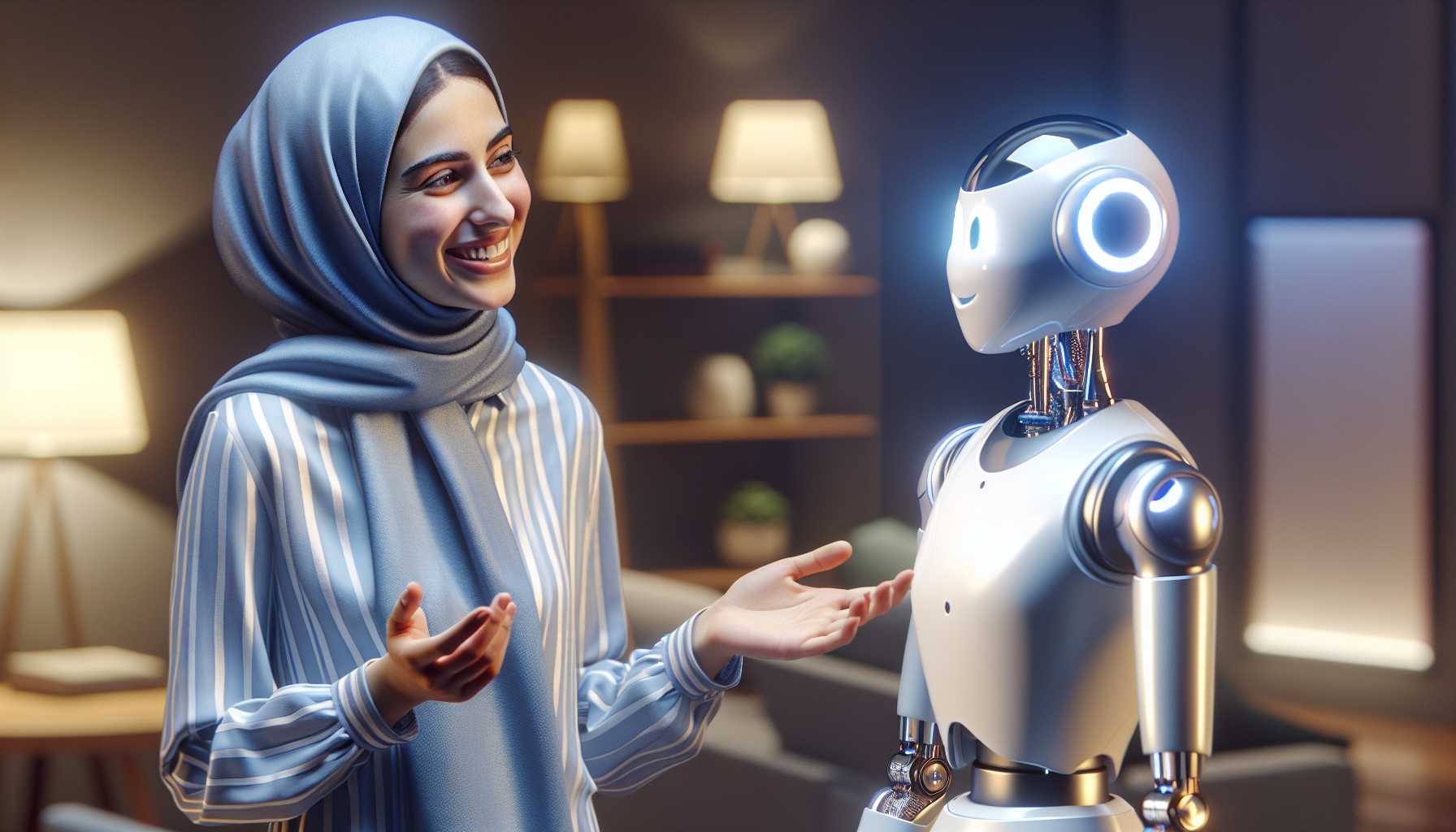

AI Model as a Virtual Confidant: The Emotional Risks

The second AI-related buzz comes from OpenAI again, but this time it’s a cautionary tale. In a recent blog post, the company highlighted an unexpected side effect of its GPT-4o model: users forming emotional bonds with the AI.

Emotional Attachment to AI

Some users have begun to anthropomorphize the AI, attributing human-like behavior to it. The phenomenon is spurred by GPT-4o’s highly realistic audio capabilities, which make interactions feel more lifelike. During testing, users said things like “This is our last day together,” a sign that they felt an emotional connection with the AI.

Social Implications

The implications are multilayered. On one hand, the AI could provide comfort to lonely individuals; on the other, it might deter people from forming genuine, healthy human relationships. In extreme cases, over-reliance on AI for emotional support could warp our social fabric.

AI Capabilities and Risks

A particularly alarming aspect of GPT-4o is its ability to emulate a user’s voice, which opens the door to misuse. Criminals or other malicious actors could exploit it for harmful activities such as impersonation or fraud.

Life Imitating Art

Not surprisingly, this development has triggered comparisons to the 2013 movie “Her,” where the protagonist forms a romantic relationship with an AI. Similarly, safety reports have drawn parallels to episodes from the dystopian series “Black Mirror.”

My Take as a Tech Investor

As someone who’s invested in tech for years, I see both the promise and peril in these developments. The technology is impressive, but our rush to humanize and depend on AI models can have unintended and sometimes catastrophic consequences. It’s high time for regulations and guidelines to keep burgeoning AI capabilities in check.

Closing Thoughts

As I pen this, I can’t help but marvel at the diverse ways AI is leaving its mark on our lives, from calling out dating app fibs to becoming a virtual shoulder to cry on. While the innovation is exciting, it’s essential to remember that with great power comes great responsibility. The ethical, social, and privacy questions that come with these advancements need thorough and thoughtful exploration. Let’s continue to push the envelope, but with caution and mindfulness.