OpenAI’s ChatGPT Revamps Content Moderation: A Farewell to Orange Warnings

In the ever-evolving world of artificial intelligence, keeping up with platform updates and policy changes can feel like trying to catch a runaway train. This week, OpenAI, one of the trailblazers in AI research and deployment, has once again made headlines by changing how ChatGPT communicates its content moderation. If you’re wondering why your spicy chat no longer triggers a flashing orange warning, it’s because OpenAI is trying to foster a more open dialogue with its users. Here’s how it is shaking things up.

The Orange Box Exit

OpenAI’s recent update has made the once-ubiquitous orange warning boxes vanish into the digital ether. These warnings flagged users whose messages might have violated the content policies, serving as a reminder that a line may have been crossed. While critics have argued that the warnings amounted to arbitrary censorship, OpenAI insists the change affects only how its content policies are communicated, not how the model responds. As a passionate advocate for transparent AI practices, I see this as a sign of OpenAI’s commitment to a more interactive user experience.

A Call to Action: Sharing Experiences

Members of OpenAI’s model behavior team, including Laurentia Romaniuk and Joanne Jang, have actively asked users to share cases in which content moderation seemed unnecessary or excessive. This feedback is crucial for calibrating how the model handles controversial content. The loop mirrors the iterative process intrinsic to tech innovation and reflects a customer-centric approach to improvement. By genuinely incorporating user input, OpenAI signals its dedication to striking a balance between safety and intellectual freedom.

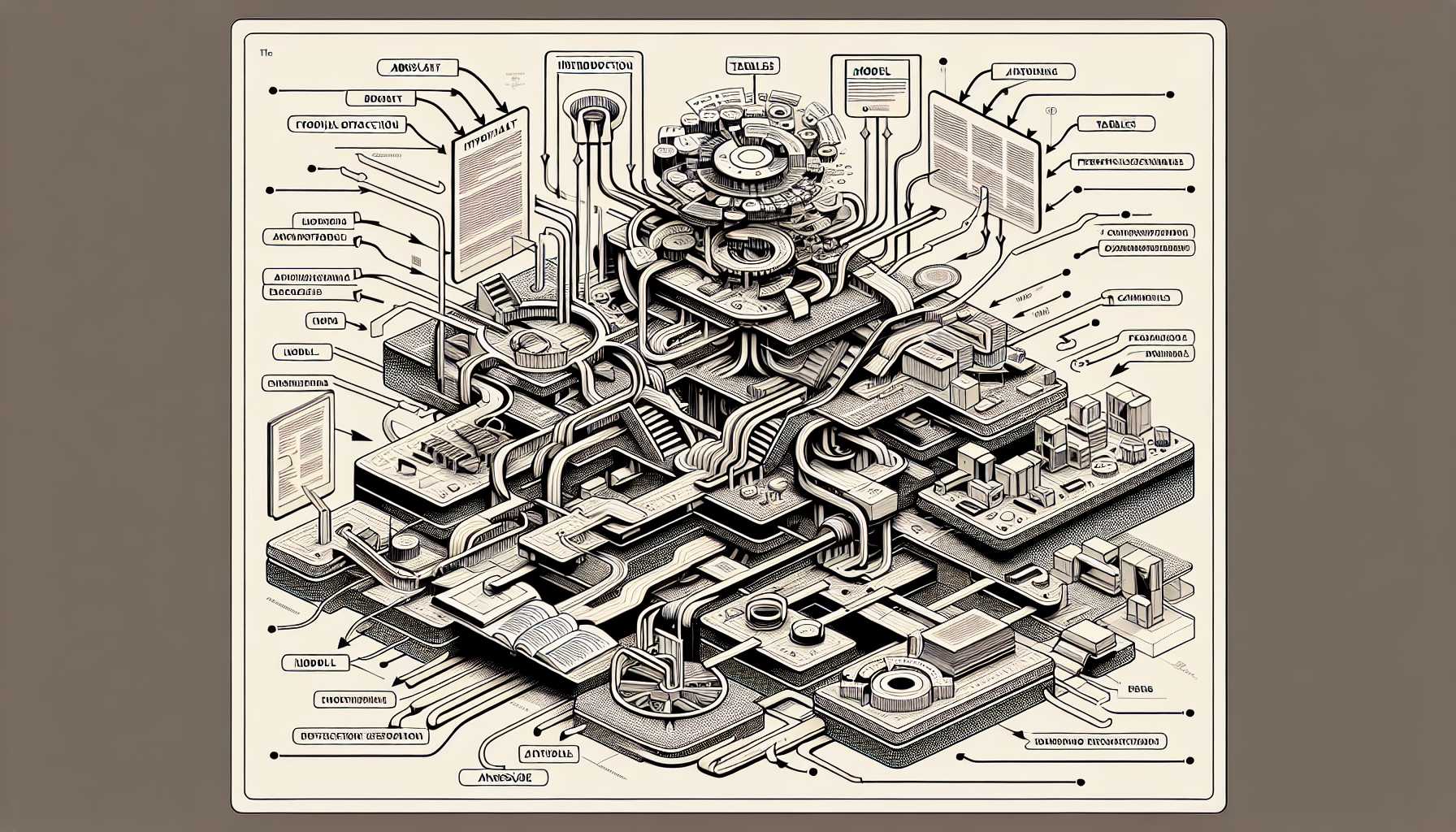

Decoding the New Model Spec

In a herculean revamp of its Model Spec, OpenAI has produced a document that outlines how its models should respond to user requests. The revised version expands its guidance on controversial issues by setting out OpenAI’s stance on a range of topics, including political discussions and copyrighted content. The document does more than affirm OpenAI’s vision for safety; it also offers a view into the labyrinthine work of content moderation, a balancing act between enabling discourse and mitigating harm.

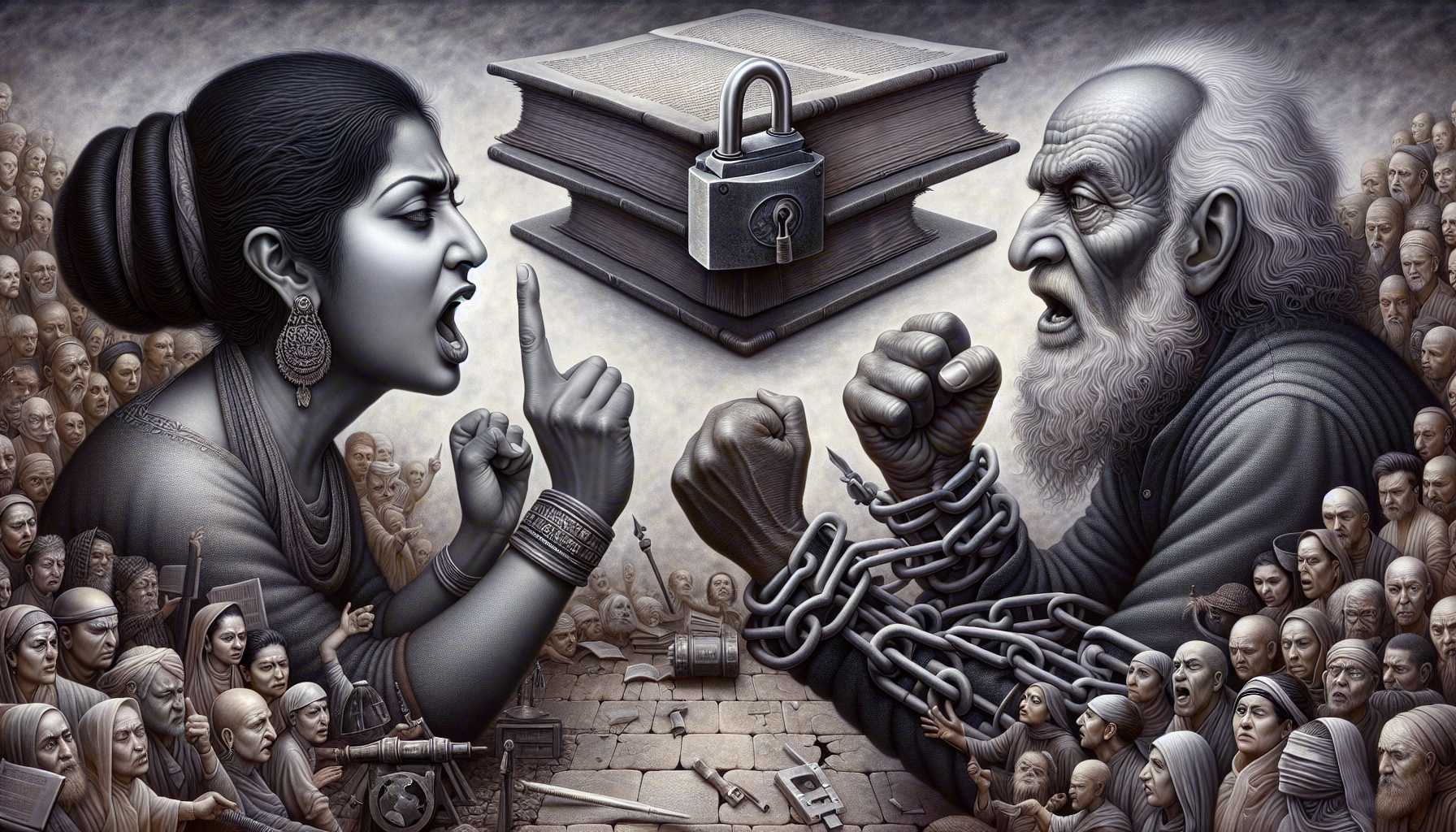

The Censorship Debate: A Delicate Dance

ChatGPT’s alleged bias has been a hot topic, with industry heavyweights such as David Sacks, President Trump’s “AI Czar,” claiming the model subscribes to a “woke” agenda. OpenAI counters by reiterating its commitment to intellectual freedom. As a tech entrepreneur, I find it fascinating to watch these debates unfold, because they are pivotal to understanding and navigating the socio-political landscape of AI ethics. The tug-of-war between open discussion and responsible moderation remains an ongoing dance, and it underscores how important transparency is in these AI systems.

Forward into the Future: The Human Element

As AI continues to ripple through digital landscapes, understanding how these tools reflect, challenge, and shape our world will be paramount. OpenAI’s removal of the orange warnings is no small concession; it symbolizes a shift in how user feedback and AI responsibilities coexist. It points toward a future in which AI moderation is refined not in isolation but in partnership with the people who use it. That blend of logic and learning is the essence of this development, and we can expect further refinement in the dialogues and boundaries AI will navigate. As someone deeply enmeshed in technology investment, I’m eager to see how this balance between accurate responses and creative dialogue will improve AI experiences in the months and years to come. If that means my personal spicy chats stay sotto voce, so be it.